Chapter 5.1 Classical Transformer

The content of this section corresponds to Chapter 5.1 of our paper. Please refer to the original paper for more details.

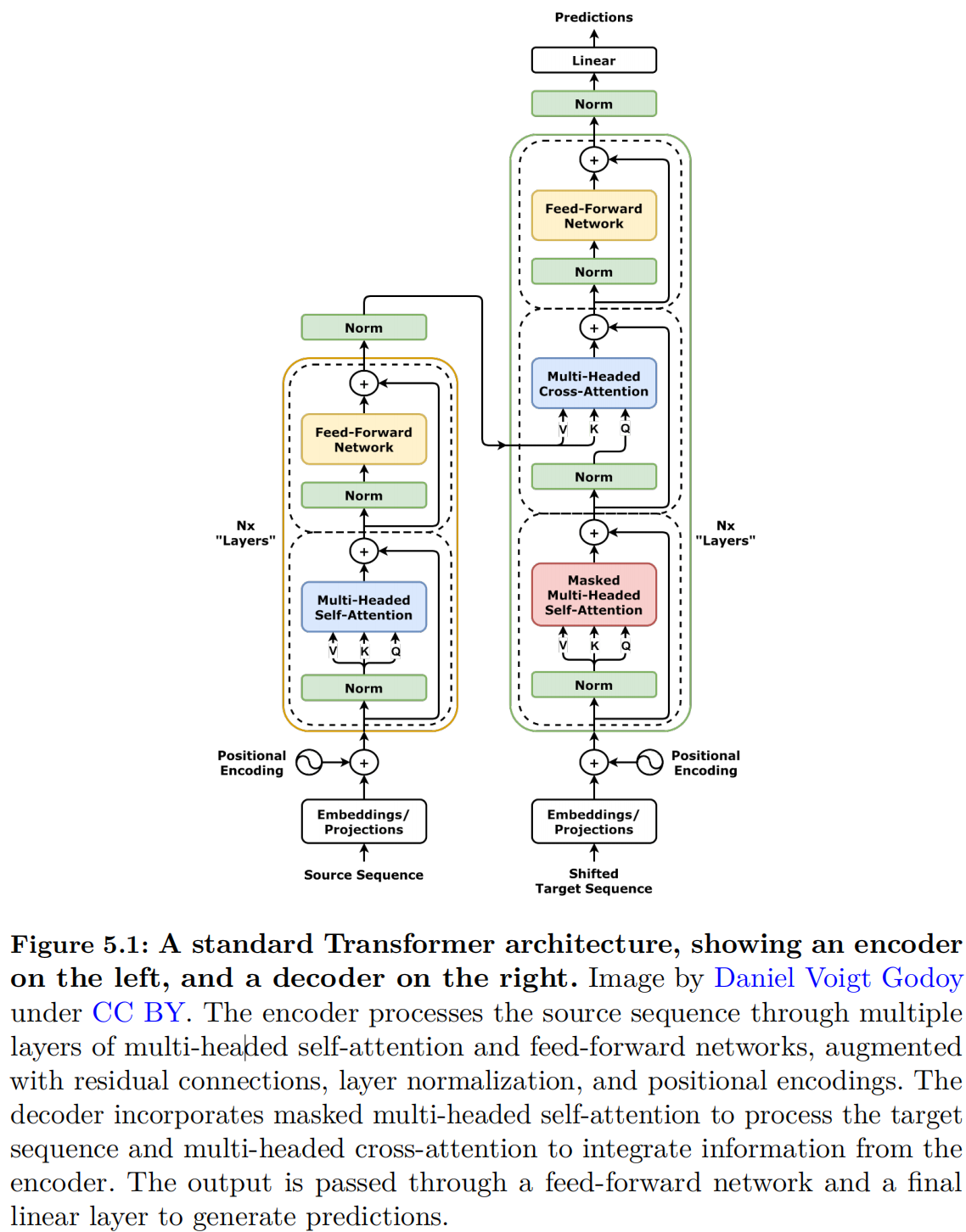

The Transformer architecture is designed to predict the next token in a sequence. Its modular design, built from residual connections, layer normalization, and feed-forward networks (FFNs) as introduced in Chapter 4.1, makes it highly scalable and customizable. This versatility has enabled its successful application in large-scale foundation models across diverse domains, including natural language processing, computer vision, reinforcement learning, and robotics.

The full architecture of the Transformer is illustrated in Figure 5.1. Note that while the original paper by @vaswani2017attention introduced both encoder and decoder components, contemporary large language models primarily adopt decoder-only architectures, which have demonstrated superior practical performance.
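
To make the modular structure concrete, the following is a minimal NumPy sketch of a single decoder-only block followed by a next-token distribution. It is an illustration under stated assumptions, not the implementation from the paper: it uses a single attention head, places layer normalization before each sub-layer (a common variant; @vaswani2017attention applied it after the residual addition), omits learned scale and bias terms, and draws all weights at random. The names `decoder_block`, `init_params`, and `w_unembed` are hypothetical.

```python
import numpy as np


def layer_norm(x, eps=1e-5):
    # Normalize each token vector to zero mean and unit variance.
    # Assumption: learned scale/shift parameters are omitted for brevity.
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


def softmax(x):
    # Numerically stable softmax over the last axis.
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)


def causal_self_attention(x, w_q, w_k, w_v, w_o):
    # Single-head attention in which position i attends only to positions <= i.
    seq_len, d_model = x.shape
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(d_model)
    future = np.triu(np.ones((seq_len, seq_len), dtype=bool), k=1)
    scores = np.where(future, -np.inf, scores)  # mask out future positions
    return softmax(scores) @ v @ w_o


def feed_forward(x, w1, w2):
    # Position-wise FFN: linear, ReLU, linear.
    return np.maximum(x @ w1, 0.0) @ w2


def decoder_block(x, p):
    # Each sub-layer is wrapped in a residual connection.
    x = x + causal_self_attention(layer_norm(x), p["w_q"], p["w_k"], p["w_v"], p["w_o"])
    x = x + feed_forward(layer_norm(x), p["w1"], p["w2"])
    return x


def init_params(d_model, d_ff, rng):
    # Hypothetical helper: small random weights, for illustration only.
    shapes = {
        "w_q": (d_model, d_model), "w_k": (d_model, d_model),
        "w_v": (d_model, d_model), "w_o": (d_model, d_model),
        "w1": (d_model, d_ff), "w2": (d_ff, d_model),
    }
    return {name: rng.normal(0.0, 0.02, size=shape) for name, shape in shapes.items()}


rng = np.random.default_rng(0)
d_model, d_ff, seq_len, vocab_size = 8, 32, 5, 11
params = init_params(d_model, d_ff, rng)

x = rng.normal(size=(seq_len, d_model))      # stand-in for embedded input tokens
h = decoder_block(x, params)                 # shape: (seq_len, d_model)

# Project each position onto the vocabulary to get a next-token distribution.
w_unembed = rng.normal(0.0, 0.02, size=(d_model, vocab_size))
next_token_probs = softmax(layer_norm(h) @ w_unembed)

# Causality check: perturbing the last token leaves earlier positions unchanged.
x_perturbed = x.copy()
x_perturbed[-1] += 1.0
h_perturbed = decoder_block(x_perturbed, params)
earlier_positions_unchanged = np.allclose(h[:-1], h_perturbed[:-1])
```

Because of the causal mask, the output at each position depends only on that position and the ones before it, which is what allows a decoder-only model to be trained on next-token prediction at every position of a sequence in parallel. A full model would stack many such blocks and use multiple attention heads.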